Trump posted a fake Taylor Swift image. AI and deepfakes are only going to get worse this election cycle

A patriotic image shows megastar Taylor Swift dressed up like Uncle Sam, falsely suggesting she endorses Republican presidential nominee Donald Trump.

“Taylor Wants You To Vote For Donald Trump,” the image, which appears to be generated by artificial intelligence, says.

Over the weekend, Trump amplified the lie when he shared the image, along with others depicting support from Swift fans, with his 7.6 million followers on his social network, Truth Social.

Deception has long played a part in politics, but the rise of artificial intelligence tools that allow people to rapidly generate fake images or videos by typing out a phrase adds another complex layer to a familiar problem on social media. Known as deepfakes, these digitally altered images and videos can make it appear someone is saying or doing something they aren’t.

As the race between Trump and Democratic nominee Kamala Harris intensifies, disinformation experts are sounding the alarm about generative AI’s risks.

“I’m worried as we move closer to the election, this is going to explode,” said Emilio Ferrara, a computer science professor at USC Viterbi School of Engineering. “It’s going to get much worse than it is now.”

Platforms such as Facebook and X have rules against manipulated images, audio and videos, but they’ve struggled to enforce these policies as AI-generated content floods the internet. Faced with accusations they’re censoring political speech, they’ve focused more on labeling content and fact checking, rather than pulling posts down. And there are exceptions to the rules, such as satire, that allow people to create and share fake images online.

“We have all the problems of the past, all the myths and disagreements and general stupidity, that we’ve been dealing with for 10 years,” said Hany Farid, a UC Berkeley professor who focuses on misinformation and digital forensics. “Now we have it being supercharged with generative AI and we are really, really partisan.”

Amid the surging interest in OpenAI, the maker of popular generative AI tool ChatGPT, tech companies are encouraging people to use new AI tools that can generate text, images and videos.

Farid, who analyzed the Swift images that Trump shared, said they appear to be a mix of both real and fake images, a “devious” way to push out misleading content.

People share fake images for various reasons. They might be doing it to just go viral on social media or troll others. Visual imagery is a powerful part of propaganda, warping people’s views on politics including about the legitimacy of the 2024 presidential election, he said.

On X, images that appear to be AI-generated depict Swift hugging Trump, holding his hand or singing a duet as the Republican strums a guitar. Social media users have also used other methods to falsely claim Swift endorsed Trump.

X labeled one video that falsely claimed Swift endorsed Trump as “manipulated media.” The video, posted in February, uses footage of Swift at the 2024 Grammys and makes it appear as if she’s holding a sign that says, “Trump Won. Democrats Cheated!”

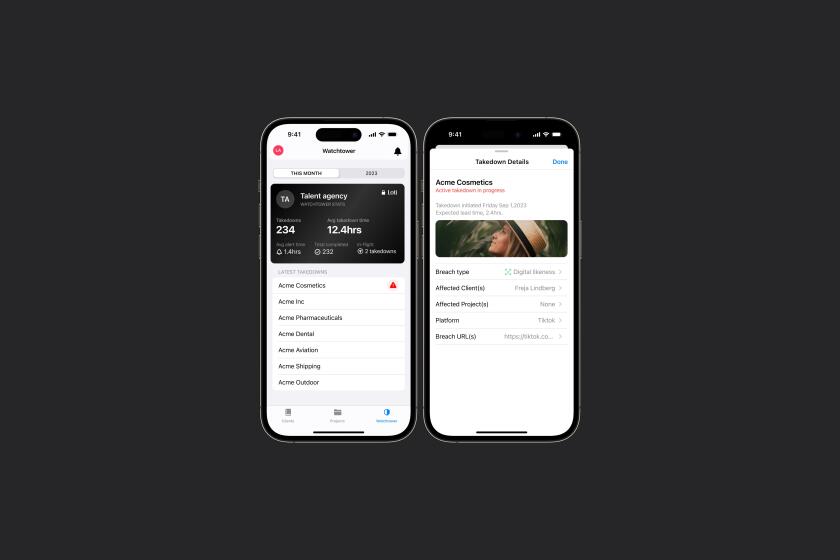

WME is partnering with Seattle-based AI and image recognition company Loti to stop unauthorized digital use of images from WME clients, including deepfakes.

Political campaigns have been bracing for AI’s impact on the election.

Vice President Harris’ campaign has an interdepartmental team “to prepare for the potential effects of AI this election, including the threat of malicious deepfakes,” said spokeswoman Mia Ehrenberg in a statement. The campaign only authorizes the use of AI for “productivity tools” such as data analysis, she added.

Trump’s campaign didn’t respond to a request for comment.

Part of the challenge in curbing fake or manipulated video is that the federal law that guides social media operations doesn’t specifically address deepfakes. The Communications Decency Act of 1996 does not hold social media companies liable for hosting content, as long as they do not aid or control those who posted it.

But over the years, tech companies have come under fire for what has appeared on their platforms, and many social media companies have established content moderation guidelines, such as prohibitions on hate speech, to address the problem.

“It’s really walking this tightrope for social media companies and online operators,” said Joanna Rosen Forster, a partner at law firm Crowell & Moring.

Legislators are working to address this problem by proposing bills that would require social media companies to take down unauthorized deepfakes.

Gov. Gavin Newsom said in July that he supports legislation that would make altering a person’s voice with the use of AI in a campaign ad illegal. The remarks were a response to a video billionaire Elon Musk, who owns X, shared that uses AI to clone Harris’ voice. Musk, who has endorsed Trump, later clarified that the video he shared was parody.

The Screen Actors Guild-American Federation of Television and Radio Artists is one of the groups advocating for laws addressing deepfakes.

Duncan Crabtree-Ireland, SAG-AFTRA’s national executive director and chief negotiator, said social media companies are not doing enough to address the problem.

“Misinformation and outright lies spread by deepfakes can never really be rolled back,” Crabtree-Ireland said. “Especially with elections being decided in many cases by narrow margins and through complex, arcane systems like the electoral college, these deepfake-fueled lies can have devastating real world consequences.”

Crabtree-Ireland has experienced the problem firsthand. Last year, he was the subject of a deepfake video circulating on Instagram during a contract ratification campaign. The video, which showed false imagery of Crabtree-Ireland urging members to vote against a contract he negotiated, got tens of thousands of views. And while it had a caption that said “deepfake,” he received dozens of messages from union members asking him about it.

It took several days before Instagram took the deepfake video down, he said.

“It was, I felt, very abusive,” Crabtree-Ireland said. “They shouldn’t steal my voice and face to make a case that I don’t agree with.”

With a tight race between Harris and Trump, it’s not surprising both candidates are leaning on celebrities to appeal to voters. Harris’ campaign embraced pop star Charli XCX’s depiction of the candidate as “brat” and has used popular tunes such as Beyoncé’s “Freedom” and Chappell Roan’s “Femininomenon” to promote the Democratic Black and Asian American female presidential nominee. Musicians Kid Rock, Jason Aldean and Ye, formerly known as Kanye West, have voiced their support for Trump, who was the target of an assassination attempt in July.

Swift, who has been the target of deepfakes before, hasn’t publicly endorsed a candidate in the 2024 presidential election, but she’s criticized Trump in the past. In the 2020 documentary “Miss Americana,” Swift says in a tearful conversation with her parents and team that she regrets not speaking out against Trump during the 2016 election and slams Tennessee Republican Marsha Blackburn, who was running for U.S. Senate at the time, as “Trump in a wig.”

Swift’s publicist, Tree Paine, did not respond to a request for comment.

AI-powered chatbots from platforms such as Meta, X and OpenAI make it easy for people to create fictitious images. While news outlets have found that X’s AI chatbot Grok can generate election fraud images, other chatbots are more restrictive.

When a reporter asked Meta AI’s chatbot to create images of Swift endorsing Trump, it declined.

“I can’t generate images that could be used to spread misinformation or create the impression that a public figure has endorsed a particular political candidate,” Meta AI’s chatbot replied.

Meta and TikTok cited their efforts to label AI-generated content and partner with fact checkers. For example, TikTok said an AI-generated video falsely depicting a political endorsement of a public figure by an individual or group is not allowed. X didn’t respond to a request for comment.

When asked how Truth Social moderates AI-generated content, the platform’s parent company, Trump Media and Technology Group Corp., accused journalists of “demanding more censorship.” Truth Social’s community guidelines have rules against posting fraud and spam but don’t spell out how the platform handles AI-generated content.

With social media platforms facing threats of regulation and lawsuits, some misinformation experts are skeptical that social networks want to properly moderate misleading content.

Social networks make most of their money from ads so keeping users on the platforms for a longer time is “good for business,” Farid said.

“What engages people is the absolute, most conspiratorial, hateful, salacious, angry content,” he said. “That’s who we are as human beings.”

It’s a harsh reality that even Swifties won’t be able to shake off.

Staff writer Mikael Wood contributed to this report.